One of our projects is 1) adjacent to a tidally-influenced body of water; 2) has a RCRA cap; and 3) a bunch of transducer-outfitted wells each collecting 48 records/day to prove or disprove hydraulic connection with said river. So we track environmental parameters including daily river fluctuations and local rainfall.

Enter the Internet and web scraping.

There are a ton of different ways of pulling data from the 'Net but, frankly, the easiest to validate -- prove is working to those who ask -- is the HTTP interface (type of text handled by a browser - a webpage). I found Excel is a fine way of scraping raw delimited text, then link that to Access and append new records where they belong. (As municipalities improve XML interfaces I expect to go that direction -- which should make this a little more efficient!)

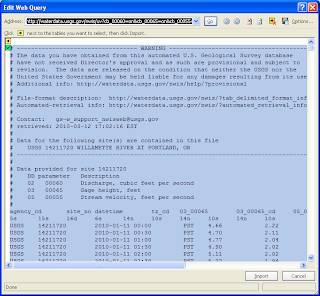

There are a ton of different ways of pulling data from the 'Net but, frankly, the easiest to validate -- prove is working to those who ask -- is the HTTP interface (type of text handled by a browser - a webpage). I found Excel is a fine way of scraping raw delimited text, then link that to Access and append new records where they belong. (As municipalities improve XML interfaces I expect to go that direction -- which should make this a little more efficient!)First -- get the river data. The USGS provides 30-minute increment stage, flow and discharge data for the river through their website. They have a whole page on automated data retrieval -- but with the usual caveats I worked the quick-n-dirty.

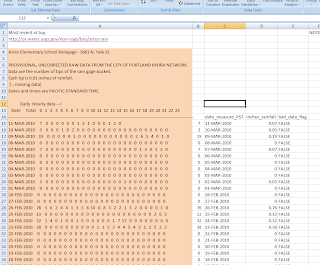

Second -- collecting precipitation data. Our City (that works!) has a nice network of automated bucket gages hosted by the USGS. Pulling that into Excel looks like this (right):

The pink-ish cells are the "scraped" HTML -- the cells to the right contain a little text handling, stuff like:

- date_PST:

- =LEFT(A15, 11)

- inch_precip:

- =IF(ISNUMBER(VALUE(B15)), VALUE(B15)*0.01, "")

- bad_data_flag:

- =IF(ISNUMBER(VALUE(B15)), "FALSE", "TRUE")

Next, I used linked tables in Access to pull this into a "staged" area -- which I think is important when you're relying on outside data -- you can control and manipulate the actual data table by using SQL queries to pull from the staged data.

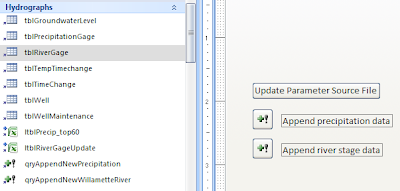

Here are the linked tables (Access shows them in little Excel icons), named ltblPrecip_top60 and ltblRiverGageUpdate:

Then the queries to pull data into tblPrecipitationGage and tblRiverGage look like this:

And...:INSERT INTO tblPrecipitationGage ( LOC_ID, FILEORIG, DTT_PST, INCH_PRECIP, DATA_FLG, UpdateUser, UpdateTime, UpdateDescription )SELECT "BES Station Number 193" AS LOC_ID, "http://or.water.usgs.gov/non-usgs/bes/astor.rain" AS FILE_ORIG, DateValue([date_measure_PST]) AS RainfallDatePST, ltblPrecip_top60.inches_rainfall, ltblPrecip_top60.bad_data_flag, fOSUserName() AS UpdateUser, Now() AS UpdateTime, "qryAppendNewPrecipitation" AS UpdateDescriptionFROM ltblPrecip_top60WHERE (((DateValue([date_measure_PST]))>(SELECT Max(tblPrecipitationGage.DTT_PST) FROM tblPrecipitationGage) And (DateValue([date_measure_PST]))<(DateValue(Now()))));

Okay, those look a little hairy -- but they aren't that bad. Really. :-)INSERT INTO tblRiverGage ( LOC_ID, FILEORIG, LoggingDTT, TimeDatum, ELEV_NAVD88, Velocity, Discharge, Data_Flag, UpdateUser, UpdateTime, UpdateDescription )SELECT ltblRiverGageUpdate.LOC_ID, "http://waterdata.usgs.gov/nwis/uv?14211720" AS FILE_ORIG, ltblRiverGageUpdate.loggingdtt, ltblRiverGageUpdate.TimeDatum, ltblRiverGageUpdate.Elevation_NAVD88, ltblRiverGageUpdate.Velocity, ltblRiverGageUpdate.Discharge, ltblRiverGageUpdate.bad_data_flag, fOSUserName() AS UpdateUser, Now() AS UpdateTime, "qryAppendNewWillametteRiver" AS UpdateDescriptionFROM ltblRiverGageUpdateWHERE (((ltblRiverGageUpdate.LOC_ID)=14211720) AND ((ltblRiverGageUpdate.loggingdtt)>(SELECT Max(tblRiverGage.LoggingDTT) FROM tblRiverGage) And (ltblRiverGageUpdate.loggingdtt)<(DateValue(Now()))))ORDER BY ltblRiverGageUpdate.loggingdtt;

Gonna have to wait until tomorrow for a reasonable explanation of those -- this is a long post, and I'm out of time. (The dog is home with her legs crossed!)

Thanks for checking this out.

No comments:

Post a Comment